Artificial intelligence (AI) is revolutionizing IT security – and posing new challenges at the same time. While AI systems help to detect and analyze threats more quickly, they also bring new risks that companies should not underestimate.

What makes AI a danger?

AI systems are complex, data-driven and often difficult to interpret. It is precisely these characteristics that make them susceptible to manipulation, misuse and wrong decisions. The risks can be roughly divided into three categories:

- Vulnerable training data

AI learns from data – and if that data is manipulated, the system can make the wrong decisions. For example, an AI-based intrusion detection system (IDS) that has been trained with falsified logs can no longer reliably detect real attacks.

- Adversarial attacks

These are deliberately crafted inputs that mislead AI systems. Even minimal changes in a data packet or image can result in an AI model failing to recognize a threat – or incorrectly classifying harmless activities as an attack.

- Black box decisions

Many AI models are not transparent. When a system makes a security-relevant decision, it is often impossible to understand why. This makes audits, compliance and troubleshooting more difficult.
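To make the adversarial case more tangible, here is a minimal sketch using a toy linear detector. The weights, bias, feature values and function names are invented for illustration and do not come from any real IDS. Real models are far more complex, but the basic idea of nudging each input slightly in the direction that lowers the alarm score carries over.

```python
"""Toy illustration of an evasion (adversarial) attack on a linear detector."""

# Hypothetical model: a sample is flagged as an attack when w.x + b > 0.
# All numbers are made up for this example.
WEIGHTS = [0.8, -0.5, 1.2]
BIAS = -1.0


def score(features):
    """Linear alarm score of a feature vector."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def is_attack(features):
    """The detector raises an alarm for positive scores."""
    return score(features) > 0


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def evade(features, epsilon):
    """Shift every feature by at most epsilon against the weight direction.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so this is the step that lowers the score the most
    under a per-feature budget of epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(WEIGHTS, features)]


malicious = [1.0, 0.2, 0.5]
perturbed = evade(malicious, epsilon=0.2)

print(is_attack(malicious))  # True: the original sample is detected
print(is_attack(perturbed))  # False: the slightly changed sample slips through
```

No feature moves by more than 0.2, yet the alarm disappears. An attacker who knows or can estimate the model's sensitivities needs only small changes of this kind.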

Concrete cases from practice

- DeepLocker (IBM Research): A proof of concept for AI-based malware that activates only under certain conditions – for example, when it recognizes a certain face. This type of targeted malware shows how AI can be used to disguise and control attacks.

- Tesla Autopilot: AI-based systems for image recognition have been fooled several times by manipulated traffic signs or markings – an example of adversarial attacks with real consequences.

- Deepfake technology: AI-generated videos and voices are increasingly being used for social engineering and CEO fraud – sometimes with enormous financial losses.

What companies can do

- Test and validate AI systems regularly

- Create transparency through explainable AI (XAI)

- Establish security mechanisms for the AI models themselves as well

- Raise awareness – including among managers
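As one possible starting point for regular testing, the sketch below checks a detector against a trusted set of known attacks and known harmless samples every time the model is retrained. Everything in it is an assumption made for illustration: the detectors are simple thresholds on failed logins per minute, the sample values are invented, and the limits of 90 % detection and 10 % false positives are placeholders that each company would have to set for itself.

```python
"""Sketch of a recurring validation check for an AI-based detector."""


def flag_rate(detector, samples):
    """Fraction of samples for which the detector raises an alarm."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(1 for sample in samples if detector(sample)) / len(samples)


def validate(detector, known_attacks, known_benign,
             min_detection=0.9, max_false_positive=0.1):
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    detection = flag_rate(detector, known_attacks)
    false_positive = flag_rate(detector, known_benign)
    if detection < min_detection:
        problems.append(f"detection rate too low: {detection:.0%}")
    if false_positive > max_false_positive:
        problems.append(f"false positive rate too high: {false_positive:.0%}")
    return problems


# Hypothetical reference data: failed logins per minute.
KNOWN_ATTACKS = [45, 60, 120, 35, 90]
KNOWN_BENIGN = [0, 2, 5, 1, 12]


def healthy_detector(failed_logins_per_minute):
    """Stand-in for a model that behaves as intended."""
    return failed_logins_per_minute > 20


def drifted_detector(failed_logins_per_minute):
    """Stand-in for a model retrained on manipulated logs."""
    return failed_logins_per_minute > 80


print(validate(healthy_detector, KNOWN_ATTACKS, KNOWN_BENIGN))
print(validate(drifted_detector, KNOWN_ATTACKS, KNOWN_BENIGN))
```

The first call reports no problems, while the second flags a detection rate that has dropped below the limit. The reference set must be stored and maintained separately from the training data, otherwise manipulated data could distort the check as well.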

Conclusion

AI is a powerful tool – but also a potential gateway for new threats. Companies need to learn not only to protect themselves with AI, but also to protect the AI itself.

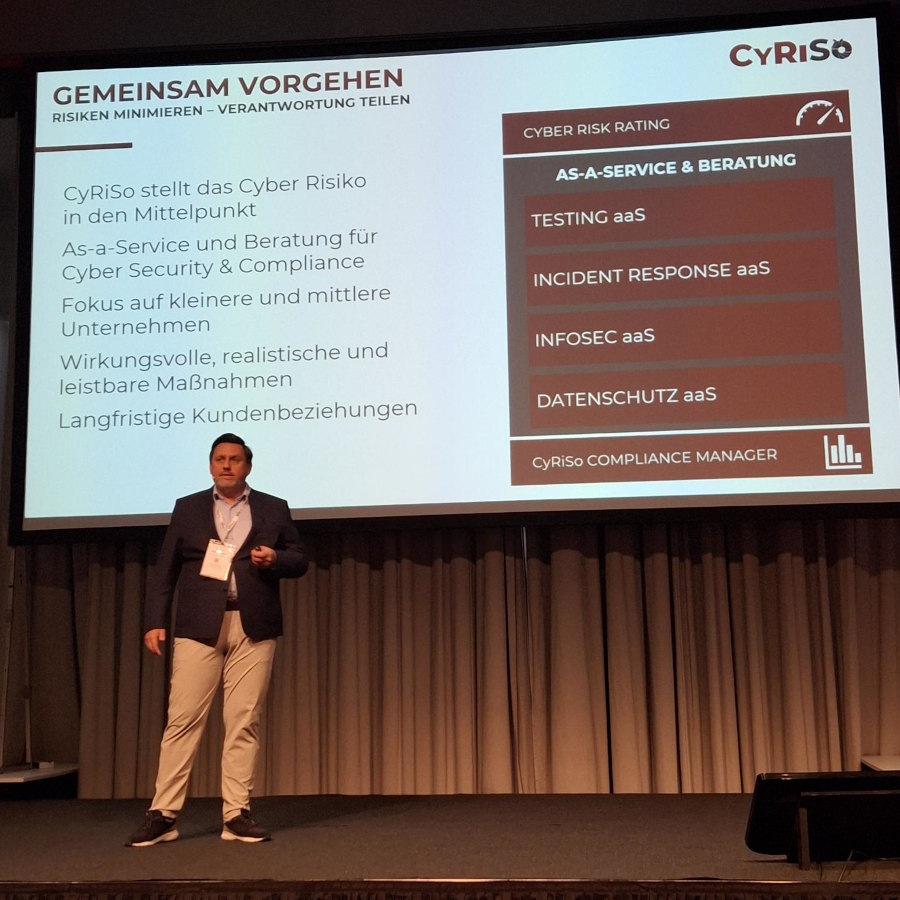

🔎 How well prepared is your company for AI risks?

Use the free Cyber Check on cyriso.io to evaluate your security strategy, or contact us.